Post by Coolverine on Mar 12, 2024 10:08:02 GMT -5

Oh yeah this mouse is pretty old.

Post by ForRealTho on Mar 17, 2024 17:40:39 GMT -5

Post by ForRealTho on Mar 20, 2024 14:59:37 GMT -5

Cables matter.

I haven't had a proper backup hard drive in years. I have an external enclosure with a 1 TB NVMe SSD inside it.

Since I got a 2 TB drive with my new PC, I figured it was time to upgrade it. When I ordered an SSD a while ago, it came with another free enclosure.

My motherboard is a B board and only has 1 USB-C port (lame). So I hooked up my current external drive via USB-C to a dock I had plugged into the USB-C port, and then I connected the other enclosure with the 2 TB SSD using a USB-C to USB-A cable plugged into one of the fast orange USB-A ports.

I started the copy and it was going at a grand total of 35 megs a second.......................on a computer from 2024. Wtf. I was out the door so I didn't have time to mess with it.

I got home and still copying at 35 megs a second.

Since Windows 11 lets you pause a file copy I hit pause. I changed things around so the 2nd enclosure with the 2 TB drive was using a USB-C to USB-C cable plugged directly into the motherboard, no dock. Then I hooked up my original enclosure to the orange USB-A port using the cable it came with, which I know is capable of high speed.

Unpaused the file transfer and it shot up to 90 megs right away.
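To put those two speeds in perspective, here is a rough back-of-the-envelope sketch. It assumes a full 1 TB of data (the post doesn't say how much was actually copied), decimal units (1 TB = 1,000,000 MB), and a perfectly steady transfer rate, so treat the numbers as ballpark only. The function name is made up for illustration.

```python
def copy_hours(size_gb: float, rate_mb_s: float) -> float:
    """Hours needed to copy size_gb gigabytes at a steady rate_mb_s megabytes/second."""
    seconds = size_gb * 1000 / rate_mb_s  # decimal units: 1 GB = 1000 MB
    return seconds / 3600


# Assumed workload: a full 1 TB (1000 GB) backup.
for rate in (35, 90):
    print(f"{rate} MB/s: about {copy_hours(1000, rate):.1f} hours for 1 TB")
```

Under those assumptions the cable and port swap cuts a full-drive copy from roughly 8 hours to roughly 3.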

I kinda want to upgrade my motherboard just to get more ports and overclocking ability, I just don't know if it's worth it.

Post by ForRealTho on Mar 27, 2024 21:12:53 GMT -5

Finally got around to doing a little overclocking on the 4080 Super. Ran the MSI Afterburner overclocking scanner, running 2865 core rock solid. Also did +1,000 MHz on RAM so at 12,500 RAM now.

I should be able to do 3,000 core easy if I put the voltage to 1.1, however for some reason Afterburner won't let me. I already tried different voltage access settings in Afterburner, not sure what I am doing wrong.

Other people running 4080S are able to set the voltage manually. I need to do more digging.

Post by Coolverine on Mar 28, 2024 17:34:19 GMT -5

I use EVGA Precision X, haven't tried overclocking on anything since a long time ago though. I heard the 4080 is very power efficient.

Post by Coolverine on Mar 29, 2024 0:56:23 GMT -5

*edit* Meant to put this in the "what are ye currently playing" thread, moving it there.

Been actually thinking about getting a Ryzen 7 5800X3D or even a Ryzen 9 something, and some faster RAM.

I think they even made a Ryzen 7 5700X3D, but I think for the 3080 I might need 5800X or better. It's also nice to have the larger cache and faster speed, really helps in huge games like Jedi Survivor.

Post by Emig5m on Mar 30, 2024 1:45:07 GMT -5

> *edit* Meant to put this in the "what are ye currently playing" thread, moving it there.
>
> Been actually thinking about getting a Ryzen 7 5800X3D or even a Ryzen 9 something, and some faster RAM.
>
> I think they even made a Ryzen 7 5700X3D, but I think for the 3080 I might need 5800X or better. It's also nice to have the larger cache and faster speed, really helps in huge games like Jedi Survivor.

My i9 10850k cpu usage bounces around from 22% to 32% in Horizon Forbidden West most of the time but my 3080Ti OC is at 99% usage all the time but I play at 4k. I don't think a faster CPU would do anything for me. It appears based on the usage percentage that I need more GPU for my CPU since it seems that the CPU is sitting around bored waiting on the GPU to render frames lol. My CPU be singing: Wake Me Up Before You Go-Go....
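The reasoning above (GPU pinned near 100% while the CPU idles) can be written down as a crude rule of thumb. This is only a sketch: the 95% threshold and the function name are assumptions picked for illustration, and total CPU percentage can hide a single maxed-out thread, so a low CPU number alone doesn't prove the CPU isn't limiting.

```python
def likely_bottleneck(cpu_pct: float, gpu_pct: float, gpu_bound_at: float = 95.0) -> str:
    """Very rough guess at what limits frame rate, from overall usage percentages.

    gpu_bound_at is an assumed threshold, not a standard value.
    """
    if gpu_pct >= gpu_bound_at:
        # GPU is saturated: a faster CPU is unlikely to raise average fps.
        return "GPU"
    # GPU has headroom: the limit is something else (CPU, a frame cap, etc.).
    return "not GPU"


# Numbers from the post: CPU at 22-32%, GPU at 99%, playing at 4k.
print(likely_bottleneck(cpu_pct=32, gpu_pct=99))
```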

Post by Emig5m on Mar 30, 2024 1:48:08 GMT -5

But on the other hand...my GPU be singin'....

Post by ForRealTho on Mar 30, 2024 5:03:29 GMT -5

> My i9 10850k cpu usage bounces around from 22% to 32% in Horizon Forbidden West most of the time but my 3080Ti OC is at 99% usage all the time but I play at 4k. I don't think a faster CPU would do anything for me. It appears based on the usage percentage that I need more GPU for my CPU since it seems that the CPU is sitting around bored waiting on the GPU to render frames lol. My CPU be singing: Wake Me Up Before You Go-Go....

Going from a 10th gen to a 14th gen Intel/latest Ryzen makes a huge difference in the 1% lows. It's massive. I went from a 10th gen to a 14th gen, and in Dishonored 2/Dishonored DOTO, for example, the average fps was great on the 10th gen, but on the 14th gen it never drops below 120 fps. It just feels so fluid and responsive. Obviously if you are playing mostly single player games it doesn't matter as much.
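For anyone unsure what "1% lows" means next to average fps, here is a minimal sketch of one common way to compute it: take the slowest 1% of frames and convert their average frame time to fps. Benchmark tools differ on the exact definition (some report a percentile instead), and the frame times below are invented purely for illustration.

```python
def average_fps(frame_times_ms):
    """Average fps over the whole capture."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """Fps implied by the mean frame time of the slowest 1% of frames."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)  # at least one frame
    worst = slowest_first[:count]
    return 1000.0 / (sum(worst) / count)


# Invented capture: 99 smooth frames at 8 ms and a single 25 ms hitch.
frames = [8.0] * 99 + [25.0]
print(f"average: {average_fps(frames):.1f} fps")
print(f"1% low:  {one_percent_low_fps(frames):.1f} fps")
```

The point of the made-up capture is that one hitch barely moves the average but dominates the 1% low, which is why two systems with similar average fps can feel very different.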

Post by ForRealTho on Mar 30, 2024 13:07:53 GMT -5

Turns out the reason I couldn't change the voltage on my 4080S is because the latest release version of Afterburner doesn't support the 4080S, only the beta branch. At least it wasn't because I didn't check the right box.

Post by ForRealTho on Mar 30, 2024 22:44:08 GMT -5

So success. I got the 4080S running at 1.1 voltage all the time using the beta version of Afterburner.

Overclocked the RAM 1500 MHz so running at 13,000 MHz now, and the clock going between 3000-3015. Not sure how to make it stay at 3000 and never move, need to try more things.

Ran a benchmark in Far Cry 5........and the lows were the same OC and no OC. I guess Far Cry 5 is too old for even 1440p to make a difference.

If I was running 4k or doing like 200% resolution scaling maybe there would be a difference.

I finished a couple rounds of Killing Floor 2 with the OC and no crashes or artifacts, KF2 is locked at 150 FPS max tho so it's not the best test.

I turned "Nvidia Flex" on which says "Warning, this is a very performance demanding features, a minimum of a Nvidia 980 is required!". First time I have ever seen that feature not tank framerates........if it's working right. I seem to remember it producing more gore than it does.

Need to test Cyberpunk 2077

Post by ForRealTho on Mar 30, 2024 22:52:32 GMT -5

..........and nope. Cyberpunk crashed at the title screen using those settings. Need to back down and try again lol.

Also, I don't know how but my PC got set to Balanced power mode. I had it set on High Performance day 1. I know I checked it at least once since then and it was still on High Performance.

No idea how that happened.

Post by Emig5m on Apr 1, 2024 21:47:50 GMT -5

> ..........and nope. Cyberpunk crashed at the title screen using those settings. Need to back down and try again lol.
>
> Also, I don't know how but my PC got set to Balanced power mode. I had it set on High Performance day 1. I know I checked it at least once since then and it was still on High Performance.
>
> No idea how that happened.

Probably Windows Update.

Post by ForRealTho on Apr 8, 2024 18:41:53 GMT -5

Post by Coolverine on Apr 9, 2024 23:12:20 GMT -5

Kinda glad I waited then, if that is true.

Post by ForRealTho on May 5, 2024 14:44:56 GMT -5

Well shit. I have been using HDR1000 since I got this monitor since I play in a dark room with blackout curtains when I play games, but this guy swears HDR1000 is a waste. I just switched to HDR400, going to need to test some games

Post by ForRealTho on May 19, 2024 20:04:29 GMT -5

I almost waited for the WOLED 4k LG that switches to 1080p 480hz for competitive gaming. Glad I didn't now. Looks like QD-OLED is just plain better

Post by ForRealTho on Jul 2, 2024 20:43:26 GMT -5

I wasn't planning on doing any "upgrading". My 2020 Eluktronics had other plans.

That laptop was a beast for all of COVID but has always had cooling problems. They decided to make it thin and light like an Air with a 10th gen Intel...bad idea. Hence why I repasted it so many times with liquid metal.

Went to an audio meetup last week, 4 hour drive there. I haven't really touched my laptop much since I got my desktop. I got everything set up for the meetup.

As I was packing to leave I thought "Maybe I should bring my Macbook Air and external drive just in case?"

Naw, what are the chances of my laptop dying on this trip?

Get to my hotel. Take my laptop out of my bag. Won't turn on. Fuck. I didn't even bring a screwdriver. I got a small screwdriver from the hotel. Got the back of the laptop off. Tightened up the screws, popped the battery, CMOS, everything you would try to fix a laptop.

No dice. It's dead.

Luckily my hotel was a 10 minute drive from a Microcenter. I have heard of Microcenter but never been. I headed over. They had an amazing gaming laptop for $999. 4070, 32 gigs of RAM, 13th gen Intel......but it was 15 inches.

I passed. Ended up getting a 17" Acer with an AMD chip and a 4060. I already own a desktop with a 4080 Super. This is my travel/backup laptop so I don't need it to be top of the line.

Took the SSD out of my dead Eluktronics and put it in the Acer, got everything all set up for the meetup.

Played around with it a bit. It's only 1080p so in Cyberpunk I was able to get 150 fps no problem, my god OLED has ruined me tho. The 165 hz panel looked fucking HORRIBLE to me now, no HDR, no amazing blacks. OLED changes how you see PC gaming for real.

Also it has a button to change modes just like my Eluktronics including a beast/turbo mode.

Going from 14nm to 4nm is beyond ridiculous. The AMD chip runs so cool. It's 8 core/16 thread just like my old Intel but holy fucking does it run cool. Never seen it go over 65c even under full load.

The 4060 is like 10% faster than the 2070 Super my old laptop had but again it uses WAY less power and hence way less heat.

Nice little machine.

Post by Coolverine on Jul 2, 2024 21:34:44 GMT -5

I thought about the 4060 and 4070, but the one thing that steered me away was that they only have a 128-bit and 192-bit memory bus (respectively), even the X070's before had 256-bit mem bus and the X060's had 192.

Don't know if maybe Nvidia made it to where it still works good at a much lower bandwidth, but in my experience having higher memory bandwidth is preferable.
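For reference, bus width is only half of the bandwidth picture: peak memory bandwidth is roughly the bus width in bytes times the per-pin data rate. A quick sketch of that arithmetic is below. The per-pin rates in the table are illustrative assumptions, not verified specs for any particular card, and the function name is made up.

```python
def bandwidth_gb_s(bus_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: (bus width in bytes) x (per-pin data rate in Gbps)."""
    return bus_bits / 8 * gbps_per_pin


# Hypothetical configurations: (bus width in bits, assumed per-pin rate in Gbps).
configs = {
    "256-bit @ 14 Gbps": (256, 14),
    "192-bit @ 21 Gbps": (192, 21),
    "128-bit @ 17 Gbps": (128, 17),
}
for name, (bits, rate) in configs.items():
    print(f"{name}: {bandwidth_gb_s(bits, rate):.0f} GB/s")
```

So a narrower bus with faster memory can land close to, or even above, an older wider bus, though at the same memory speed the wider bus always wins.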

Post by ForRealTho on Jul 3, 2024 7:51:55 GMT -5

> I thought about the 4060 and 4070, but the one thing that steered me away was that they only have a 128-bit and 192-bit memory bus (respectively), even the X070's before had 256-bit mem bus and the X060's had 192.
>
> Don't know if may Nvidia made it to where it still works good at a much lower bandwidth, but in my experience having higher memory bandwidth is preferable.

For sure it is, but with DLSS and Frame Generation it's less of a big deal.

Also I left texture quality at Quality for years and LOD Bias at Clamp. For DLSS it's better to go High Quality and Allow Bias. It lets DLSS work better.

Post by ForRealTho on Jul 3, 2024 9:28:26 GMT -5

Post by sj on Jul 5, 2024 11:15:56 GMT -5

The difference is exaggerated in a dark room. If u prefer a dark room and are stuck with an IPS panel, you can get those LED strip lights with adhesive and attach them to the back of your monitor, which helps mitigate the effect of IPS glow. Of course, if u have the money, OLED is def superior.

Sony's early flat-panel monitors (4:3 aspect ratio) didn't have these backlight bleed & uniformity issues (not to this horrible degree, at least) and the colors were quite good. They were expensive tho, ppl didn't buy them and so Sony left the market.

VA panels have less backlight bleed and better contrast than IPS, but the viewing angles are bad w/ shifts in brightness & clarity depending on viewing angle. VA is particularly bad with PC monitors, as the closer you sit to the screen, the worse the effect becomes. That is, move your seating position slightly to the right or left, and you see big shifts in brightness & clarity. But even sitting directly in front of the (VA) monitor and holding perfectly still, the text clarity just isn't as good as IPS or OLED. It's almost as bad as TN panels, as far as viewing angle issues.

Post by Cop on Jul 9, 2024 9:41:24 GMT -5

> you can get those LED strip lights with adhesive and attach them to the back of your monitor

Heh, I did that with my TV.

First thing I did however when I switched to LCD from CRT in a dark room was to buy LED strips and use them to light the insides of the built-in cupboards on both sides of the screen of which I'd removed the doors, which already worked wonders. This was still pretty early in that technology, so instead of being a long flexible strip like it is now, it used 20-30cm solid elements you could click together to make them longer and it only had a yellowish white light with no dimming capabilities. Was reasonably expensive back then too, but both are still working 100% so I guess it was worth it.

A few years ago I bought one of those RGB strips for one of the kids because it cost next to nothing and I was surprised at how well it worked, so I went and bought one for me as well. Eventually that made it to the back of my TV, where I matched the colour to the OG strips as well as I could.

Post by sj on Jul 10, 2024 9:01:38 GMT -5

> I almost waited for the WOLED 4k LG that switches to 1080p 480hz for competitive gaming. Glad I didn't now. Looks like QD-OLED is just plain better

Glossy monitors pretty much require a dark room, unless you enjoy watching your own reflection while using your PC.

Post by ForRealTho on Jul 10, 2024 12:26:04 GMT -5

> Glossy monitors pretty much require a dark room, unless you enjoy watching your own reflection while using your PC.

I have black blinds in every room I game in, have since I was a kid. During the summer there's a lot of light to block out. Couldn't imagine having a bright light on behind my head playing games.

Post by ForRealTho on Jul 12, 2024 8:05:41 GMT -5

This is cool. My current monitor is on the far right. I remember back in the day someone complaining there weren't enough matte monitors and how glossy ruins the image, some big game developer, I don't recall who. I guess matte isn't some magic cure for anything.

OLED HDR has ruined me. Even "fake" HDR like the RTX HDR I was using in Amnesia The Bunker. Any fire in the game looked amazing

Post by ForRealTho on Jul 12, 2024 20:48:20 GMT -5

Looks like I posted the wrong video above, replaced it

Post by ForRealTho on Jul 13, 2024 16:51:53 GMT -5

Post by ForRealTho on Jul 16, 2024 10:49:23 GMT -5

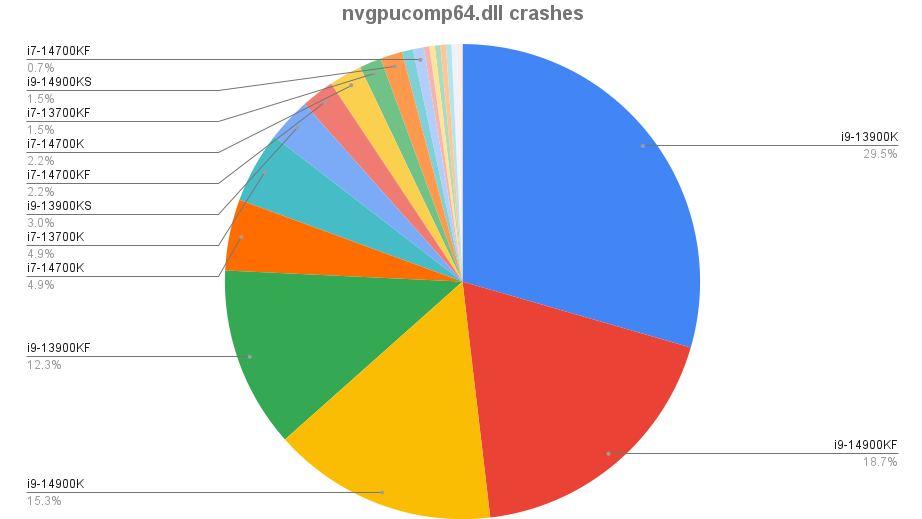

I thought about getting an i9-14900KF / 4090 desktop but the cheapest one I found was like $3099, with some pushing $4000.

Glad I got the i7-14700KF. The amount of crashing and such with the i9s is absolutely ridiculous. I would be pissed if I paid that much for a PC only to have it crash and crash and have Intel deny and deny.

Post by ForRealTho on Jul 16, 2024 10:57:05 GMT -5

Skipping the 4090 for the 4080S got me thinking. When was the last time I actually had the best videocard? I typically skipped the best and went second best. When Doom 3 launched, the GTX (I think) was the only card in the world with 512 megs of RAM that would run Ultra settings. Being in college I got the 6800 GT instead. Then after that I got the 8800 GTS, which was one step below the 8800 GTX (I think).

The last time I got the absolute best was the Radeon 9700 Pro. That is on the list of best videocards of all time. I was actually using a Radeon 8500 before that. The 8500 was a solid card but ATI made some hard choices that led to some shortcomings. Back in those days the Nvidia fans would say "ATI drivers are so bad you can't even play games" but that wasn't my experience. I did have a couple of problems here and there but nothing ridiculous.

I got the Radeon 9700 Pro at Best Buy, first time I got a card at Best Buy instead of CompUSA (RIP). It was buried behind a bunch of other cards so I got the last one in the store. Amazing card for its time.
