|

|

Post by sj on Oct 2, 2024 22:22:08 GMT -5

Also, some newer OLEDs auto-dim the screen when the temps get too high. I think the latest Samsung OLED monitor has that, as well as the LG C4 OLED TVs.

I hate auto-dimming. It's particularly annoying when a scene in a game or movie goes darker as a normal part of the content. When the TV/monitor dims itself during a dark scene like that, you can barely see any detail. That's why I bought an LG C3 (55") OLED TV just before it was phased out and at its cheapest price ($1099 at Best Buy). It's not quite as bright overall as the newer LG C4 model (but not significantly less bright either). However, unlike the C4, the C3 stays the same brightness (i.e. no auto-dimming) and doesn't have a green tinge when viewed at an angle - an issue rtings.com reported with the C4.

Interestingly, LG's top-end model, the G4, wasn't reported to have the green tinge at wide viewing angles. It's almost as if LG engineered this flaw into the C4 to give people a reason to go for their over-priced G4. In the prior (C3/G3) generation, pro reviews said the C3 was so close to the G3 that you should just go with the C3 and save a ton.

|

|

|

|

Post by Coolverine on Oct 3, 2024 7:29:06 GMT -5

Well what stood out to me was the fact that it says it can "reduce" the risk of burn-in, not completely avoid it. I leave my IPS monitor on all the time and have never noticed any burn-in, and sometimes it stays on a solid screen for a while. Have seen temporary burn-in on my secondary monitor and also on my TV. Both are pretty old now though, the TV also has a few dark spots for some reason but still looks good.

Remember plasma TV's?

|

|

|

|

Post by sj on Oct 3, 2024 10:27:32 GMT -5

Yes.. burn-in was a risk on those too.

Plasma TVs had 480Hz refresh way back when, before GPUs were capable of frames anywhere close to that. Panasonic plasmas were the price/performance leader, but they had some relatively minor color accuracy issues (with oranges, if I recall correctly). Pioneer plasmas were top rated, but too expensive for most ppl.

Regardless, some ppl (like myself) only use their TV/monitor for entertainment purposes 2-4 hours/day on average, so the odds of burn-in (within, say, five years) should be pretty low in those cases.
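To put rough numbers on that, here's a minimal sketch of cumulative panel-on hours. The 2-4 hours/day and five-year figures are from the post above; the 12 hours/day "always on" case is a made-up comparison point, and none of this says at what hour count burn-in actually shows up.

```python
DAYS_PER_YEAR = 365


def cumulative_hours(hours_per_day: float, years: float) -> float:
    """Total hours the panel is lit over the given number of years."""
    return hours_per_day * DAYS_PER_YEAR * years


if __name__ == "__main__":
    # 2 and 4 h/day come from the post; 12 h/day is a hypothetical heavy user.
    for hours_per_day in (2, 4, 12):
        total = cumulative_hours(hours_per_day, 5)
        print(f"{hours_per_day:>2} h/day over 5 years -> {total:,.0f} hours on")
```

So a light user racks up a few thousand hours in five years, while a screen that's on half the day accumulates several times that.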

I think burn-in is only as common as it is because many ppl are glued to their TV's & PC's too long (and/or they don't have separate IPS's specifically for work/productivity use). They think they should just have 1 TV or 1 monitor for everything, and use it all the time.

|

|

|

|

Post by ForRealTho on Oct 6, 2024 21:51:46 GMT -5

> Have an IPS monitor now and it looks great

The thing is, once you see an IPS (or any other old-school monitor) next to an OLED, it looks terrible. No offense to your IPS, I am sure it's good, but the tech just can't compete. I'm subscribed to an OLED gaming subreddit, and people buy their OLED and it makes the IPS next to it look so bad they have to buy another OLED because the two just don't go together.

Earlier tonight I played a bit more of old-school Doom 3 (CST source port www.moddb.com/mods/cstdoom3 ). I only finished it once back in the day when I was still on CRT. Looking at Doom 3 was painful on all other monitors up till I got my OLED. In pitch black rooms the screen is black. As in the pixels are off. Then I got RTX HDR working with it, and since the game is capped at 62 fps I am using Lossless Scaling frame generation. The contrast of the HDR and the dark is amazing.

OLED has completely ruined me for all other monitors. It's the first time games have looked correct since CRTs died out.

|

|

|

Post by Coolverine on Oct 7, 2024 2:15:01 GMT -5

^Am anticipating that, another reason I haven't made the change yet. Even compared to my old monitor (Asus PB277Q which is a TN, and still in use as a 2ndary monitor), the IPS looks way sharper/clearer and it especially stands out on text and other fine detail. Color-wise, they are about equal but the IPS is a little heavier on green. Could probably adjust it some.

Also have an Asus VE258Q, outstanding colors on it but it stopped working after nearly a decade. Been meaning to take it to a repair shop and see if they can bring it back to life, but still haven't found the time.

If I get an OLED monitor, would probably go for 27" and 2560x1440 like my current monitors, wouldn't mind trying that Nvidia Surround thing.

|

|

|

|

Post by sj on Oct 7, 2024 18:38:46 GMT -5

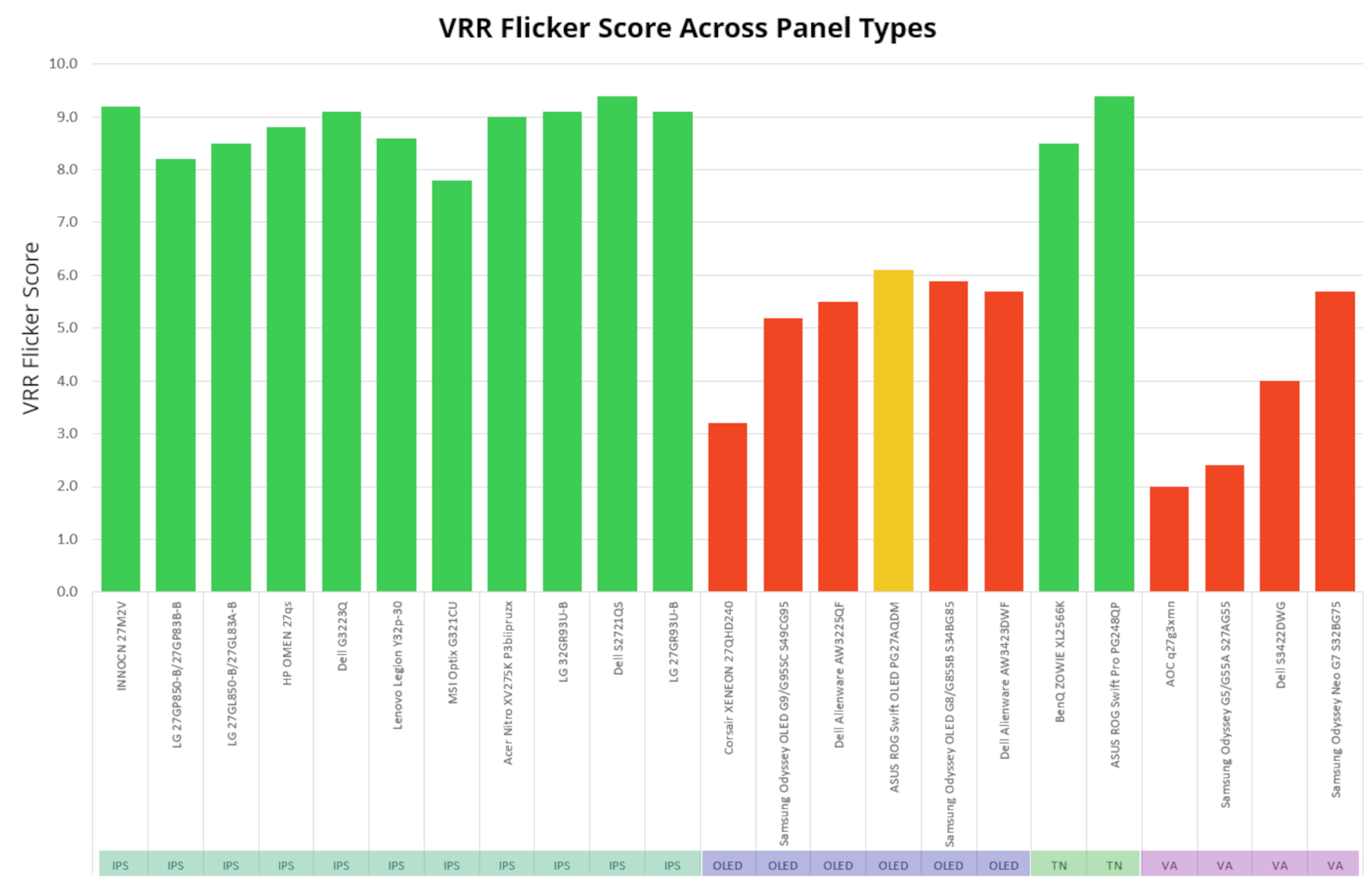

Make sure you have a decently powerful gaming PC or set the resolution low enough (for consistent frames) when you do. They say it's swings in framerate that cause the flickering on OLED and VA monitors. Notice how IPS and TN are nearly immune to the flicker problem.

www.rtings.com/monitor/learn/research/vrr-flicker

What's odd is how the cheaper (in the 32" 4K category) Chinese brand OLED is the only one that scores in the green (7.8 out of 10) on VRR flicker. Maybe MSI engineers couldn't get the VRR flicker down correctly (as an unlisted, shadow feature - meant to help the continuous consumer product upgrade cycle). shhh, shhh.

www.rtings.com/monitor/reviews/msi/mpg-321urx-qd-oled#test_1369

|

|

|

Post by ForRealTho on Oct 7, 2024 18:49:14 GMT -5

The one downside to OLED is the VRR flicker... so I just disable G-Sync. Problem solved. If I played Counter-Strike professionally I might need G-Sync but the laptop I used for 4 years didn't have G-Sync support so I got used to not having it.

|

|

|

|

Post by sj on Oct 8, 2024 14:54:00 GMT -5

Easy for you to say because you have a blazing fast rig that can maintain high frames in most situations. They said VRR flicker isn't very noticeable on a system like yours (G-Sync on or off). You see, a system like yours doesn't require gimmicks (as would lesser systems) to smooth out nonexistent low frames.

The irony, which rtings.com pointed out, is that features like G-Sync were made for smoothing out frames on systems that need it, so OLED's VRR flicker undercuts the feature exactly where it matters (on lesser systems, not ones like yours). On a lesser system, the problem (not achieving buttery smooth gameplay) isn't so easily solved by switching off the very feature intended to mask the choppiness of low frames, if that makes sense. I've read a couple of gaming articles that said the best option for no screen tearing or flicker on slower rigs (or a mid-tier rig w/ a AAA game and high quality settings) with an OLED monitor is to just go back to using V-Sync. Of course, that introduces some lag, but again, they're talking about the best solution for a lower tier gaming setup w/ OLED.

|

|

|

|

Post by ForRealTho on Oct 8, 2024 21:29:56 GMT -5

My last setup was a 240hz screen with a 2070 Super. If you turn V-Sync off it's rare to see screen tearing at 240hz. Maybe slightly at the edge of the screen. At 360hz it's even less noticeable.

I didn't have G-sync at all for 4 years so I don't mind just forcing G-Sync/V-Sync off. At 360hz both features are kinda eh.

Blurbusters.com are the experts on monitors. Right now we have 500 hz screens as the fastest, and within 5-10 years 1,000 hz monitors should be out. At 1,000 hz, V-Sync/G-Sync on or off all converges: the screen is refreshing so fast that they all look the same, according to Blurbusters.
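A quick way to see why the sync modes matter less as refresh rates climb is to look at how long a single refresh lasts. This is just a rough sketch of the arithmetic (1000 ms divided by the refresh rate), not anything taken from Blurbusters; the list of rates is simply the ones mentioned in this thread.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Duration of one refresh cycle in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


# Refresh rates mentioned in the thread.
REFRESH_RATES_HZ = [60, 240, 360, 500, 1000]


def frame_time_table(rates=REFRESH_RATES_HZ) -> dict:
    """Map each refresh rate to its refresh interval, rounded to 0.01 ms."""
    return {hz: round(frame_time_ms(hz), 2) for hz in rates}


if __name__ == "__main__":
    for hz, ms in frame_time_table().items():
        print(f"{hz:>5} Hz -> {ms:6.2f} ms per refresh")
```

A torn frame stays on screen for one refresh, and a frame held back by V-Sync waits roughly one refresh interval at most, so as that interval shrinks toward 1 ms the visible difference between the modes shrinks with it.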

|

|

|

|

Post by sj on Oct 9, 2024 13:10:34 GMT -5

> ^Am anticipating that, another reason I haven't made the change yet. Even compared to my old monitor (Asus PB277Q which is a TN, and still in use as a 2ndary monitor), the IPS looks way sharper/clearer and it especially stands out on text and other fine detail. Color-wise, they are about equal but the IPS is a little heavier on green. Could probably adjust it some.
>
> Also have an Asus VE258Q, outstanding colors on it but it stopped working after nearly a decade. Been meaning to take it to a repair shop and see if they can bring it back to life, but still haven't found the time.
>
> If I get an OLED monitor, would probably go for 27" and 2560x1440 like my current monitors, wouldn't mind trying that Nvidia Surround thing.

If you're that worried about OLED burn-in and the OLED price tag, but are ok with a highly rated VA panel gaming monitor, Samsung has a good price on this one. Sale ends today (possibly?), since I see "flash sale" posted on their site.

www.samsung.com/us/computing/monitors/gaming/27-odyssey-g65b-gaming-hub-curved-gaming-monitor-ls27bg652enxgo/

It's also at BestBuy for the same price.

www.bestbuy.com/site/samsung-odyssey-g6-27-curved-qhd-freesync-premium-pro-smart-240hz-1ms-gaming-monitor-with-hdr600-displayport-hdmi-usb-3-0-black/6522483.p?skuId=6522483

I'm not that crazy about curved monitors, but imo, I think this model's acceptable for gaming given the other features (high refresh & response, hdmi 2.1, decent contrast compared to IPS) & price.. think I'll pick one of these up for console gaming (even the PS5/Pro scales its 4K game modes to QHD resolution, and with improved detail).

|

|

|

Post by sj on Oct 9, 2024 15:58:35 GMT -5

I did preorder the PS5 Pro the other week (which ships early November) and the (above) Samsung monitor today (seems unlikely it's going to get much cheaper than $400 off retail price, so might as well get it now). I'm holding off on buying a new PC (probably a laptop w/ 5070 or 5080 laptop GPU) until the next-gen CPUs and GPUs are out.

|

|

|

|

Post by ForRealTho on Oct 9, 2024 20:11:09 GMT -5

I'll never own another console, unless it's some kind of crazy retro thing. I'd rather put the money into my PC.

|

|

|

|

Post by sj on Oct 9, 2024 20:35:42 GMT -5

> I'll never own another console, unless its some kind of crazy retro thing. I'd rather put the money into my PC

I already own a large backlog of PS4 & PS5 games and the hardware isn't that expensive, so I might as well get one. I get it tho. The main advantage to a PlayStation console is you get access to some first-party games a year or two before they make it to PC.

Well, there's also PS VR2.. Gran Turismo 7 is said to look amazing in VR and 2-1/2 years after release, still no official release on PC. Sony's VR2 is expensive and hasn't really taken off like they had hoped. I think they released an update to make their VR2 hardware compatible with PC (meaning you can play some PC VR titles on your PlayStation VR2), so the relative few people who did buy one won't feel as ripped (for Sony's small library of VR games) at least.

If PS6 isn't backwards compatible, I won't be getting one. But rumors are that Sony is working with AMD again for PS6 and pushing for full backwards compatibility. Also, Nintendo is supposedly on the backwards compatibility train with their next console and that's unlike Nintendo.

|

|

|

Post by sj on Oct 9, 2024 20:48:52 GMT -5

Of course, gaming is all about the games. The reason PS5 and Switch exist is because Sony and Nintendo make really great first party games. Microsoft's Xbox is in a distant last place because they don't pump out as many great games and their first party games usually get direct/simultaneous releases on PC anyway.

|

|

|

|

Post by sj on Oct 9, 2024 21:58:52 GMT -5

The biggest con to PC (desktop) gaming is the bigger power draw. You can play Indie & Retro games on a console or laptop (just as well), and use a fraction of the power.. which is another reason, besides games backlog & first party titles early access, I'm not opposed to owning one of each type of hardware.

Eventually, we'll all be streaming our games (to pairs of sunglasses or contact lenses). This could take 20+ years though, since the US is so slow upgrading its internet infrastructure (to bring the latency low enough to satisfy most gamers and get them to adopt games as a service/streaming).

|

|

|

|

Post by sj on Oct 9, 2024 22:12:45 GMT -5

The PS5 Pro preorders are sold out already. I'm not that surprised. As great as PC gaming is, it's a lot more expensive than console gaming. Inflation has been hitting people hard in the wallet, so naturally more ppl will shift to less expensive choices.

I'd like to get a new PC next year regardless, since there are games on PC not released on other platforms (Satisfactory, for example). If there's a bad recession though, then nope, since it doesn't make sense spending money on hobbies during times of mass layoffs.

|

|

|

|

Post by ForRealTho on Oct 10, 2024 22:32:36 GMT -5

> The biggest con to PC (desktop) gaming is the bigger power draw.

This right here. I have MSI Afterburner and my power bill to remind me. On my laptop the GPU capped out at 115 watts and the CPU at 45-65 watts. Now it's 320 watts on the GPU alone. Ever since I got my full PC my electric company has been sending me emails: "You are using 68% more power compared to this time last year"
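For a rough sense of scale, here's a back-of-the-envelope sketch comparing just the two GPU figures above (115 W vs 320 W). The hours per day, days per month, and price per kWh are made-up placeholder assumptions, and those wattages are caps, so real draw will usually be lower and will vary by game.

```python
# GPU power caps quoted in the post above.
LAPTOP_GPU_W = 115
DESKTOP_GPU_W = 320

# Placeholder assumptions, not from the thread: plug in your own numbers.
HOURS_PER_DAY = 3
DAYS_PER_MONTH = 30
PRICE_PER_KWH_USD = 0.15


def energy_kwh(watts: float, hours: float) -> float:
    """Energy used in kilowatt-hours at a constant power draw."""
    return watts * hours / 1000.0


def monthly_gpu_delta_kwh(hours_per_day: float = HOURS_PER_DAY,
                          days: int = DAYS_PER_MONTH) -> float:
    """Extra monthly energy of the desktop GPU over the laptop GPU at full load."""
    hours = hours_per_day * days
    return energy_kwh(DESKTOP_GPU_W, hours) - energy_kwh(LAPTOP_GPU_W, hours)


if __name__ == "__main__":
    delta = monthly_gpu_delta_kwh()
    print(f"Extra GPU energy per month: {delta:.2f} kWh")
    print(f"Extra cost per month: ${delta * PRICE_PER_KWH_USD:.2f}")
```

This only covers the GPU; the rest of a desktop (CPU, monitor, PSU losses) adds to the gap, and those numbers aren't given here.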

|

|

|

Post by Coolverine on Oct 11, 2024 0:12:36 GMT -5

I actually have framerate capped at 60fps in most games just so my PC uses less power and generates less heat. When it gets cold, I'll just uncap the framerate and turn off the central heater.

|

|

|

Post by sj on Oct 11, 2024 2:10:44 GMT -5

Yeah, it's a trade-off. Laptops save power and lower your energy bill. However, they also have a shorter shelf life due to integrated batteries, etc. (trying to force you into a more rapid upgrade/hardware replacement cycle) and built-in shit that seems to cause a lot more blue screens (BIOS and software - at least with Dell) to try to get you to pay for their repair services.

Interesting video if you care about OS security. He has me convinced that I should turn my old Dell XPS15 laptop into a Linux machine. The XPS15 died several months ago, and I've purchased replacement battery and SSD (which both failed around the same time).. good thing it's one of their older laptops which allowed replacing the battery.

His videos are kinda long though, so I've only finished watching the first segment and plan to watch the rest later.

|

|

|

|

Post by sj on Oct 11, 2024 10:18:22 GMT -5

I've received notices from Microsoft & Dell that the old XPS15 isn't supported by Win11 and that security updates for Win10 are being dropped next year. Although it's an older laptop and not stellar, the specs aren't so abysmal that Win11 shouldn't support it. It has an i7 quad-core (4 physical cores, 8 threads with hyper-threading) 2+GHz CPU, an Nvidia 1050 GPU w/ 4GB video memory, 64GB of low-latency system RAM (I upgraded the RAM myself), an NVMe SSD, etc. Not great for gaming, but certainly spec'd decently enough that it should run recent OSes. So why there's no Win11 support is beyond me, as MS doesn't provide any sort of technical explanation. My guess - it doesn't have the right spy chip to be supported by Win11 and its AI 24/7 recording & recall features. That's right, the (above) video talks about how MS changed their plan and now intends to add this spy tech (via Windows update) to all consumer-based Win11 machines (not just the Copilot+ PCs, as was their former promise). I believe corporate/business customers can get special licensing for recent versions of Win11 Pro or Win Server without the spy tech. If not, I could see many more businesses gradually shifting to Linux platforms.

In the video, he also mentions a special version of Win10 Pro (he hints you can acquire it through shady means - i.e. reddit search to find out) that's supported with security updates through 2032. However, this version won't get all the latest DirectX updates and some games won't run on it. MS will only be providing security updates for it (further out 'til 2032, past the normal 2025 cut-off of Win10 consumer/home editions).

|

|

|

|

Post by sj on Oct 11, 2024 12:04:34 GMT -5

Even if you think "I'm doing nothing wrong - so who cares" and you trust Microsoft (and whoever they sell your info to) and the US Federal govt (they don't even hide that they restarted the spying on US citizens a few months ago - formerly Patriot Act) with 'innocent' data like your personal medical, financial, passwords, etc.. even then.. do you trust the CCP/China with all of that data? You know, China still manufactures most computer hardware. It's conceivable that they could tap into Microsoft's new Win11 record & recall features at the hardware level, since they make the hardware, which requires a thorough understanding of the OS to make it all come together and work as intended. If the opposing argument becomes 'oh, but the government would crack down on MS if that were a concern': do you really believe all these old (70+ y/o) people in Congress (or the handful of younger ones, like AOC, Kamala) understand technology?

|

|

|

Post by sj on Oct 11, 2024 12:18:59 GMT -5

> I actually have framerate capped at 60fps in most games just so my PC uses less power and generates less heat. When it gets cold, I'll just uncap the framerate and turn off the central heater.

60fps is perfectly fine for most games. I'd wager, for most people, they only "feel" the benefit of higher frames in fast-twitch games like multiplayer online first-person shooters.

|

|

|

Post by sj on Oct 11, 2024 12:34:58 GMT -5

Although, you might be in the minority by choosing 60fps. idk the stats for PC gamers, but i've heard something to that effect regarding PS5 gamers.

I've heard through YT game channels that, at the last Playstation Showcase, Sony revealed that the vast majority of PS5 gamers always choose performance mode (lower detail, high frames) over quality mode (which can be 30fps capped on base PS5 in the more demanding AAA titles). This was one of their marketing arguments for PS5 Pro, so that players could play more of their existing libraries of games with higher detail at 60fps or 120fps.

|

|

|

|

Post by Coolverine on Oct 11, 2024 15:25:58 GMT -5

My main monitor has 144hz refresh (overclockable to 165hz) and there is absolutely a difference in 60fps vs more. My old monitor that is still in use as a secondary has a 75hz refresh rate, and even there I see a difference between 60fps and 75fps. Definitely able to react to things in the game better when framerate is smoother/faster, especially in fast-paced games.

|

|

|

|

Post by sj on Oct 11, 2024 15:55:54 GMT -5

My Acer ultrawide IPS was capable of overclocking to 75 or 85Hz, and I noticed a minor improvement. This monitor had an intermittent issue where the image turned into random splotches of color, and I had to power it off/on to correct it (something like once or twice every few days: not often enough to dump it immediately, but enough to be annoying). It was enough to put a bad taste in my mouth (a "lemon") and make me avoid Acer monitors from then on.

I also have an NEC CRT monitor (that I used in the 90's, maybe 'til the early aughts), which was capable of at least 100Hz. It's a really great monitor (great colors, never an issue) and I've kept it in storage. I remember the 100Hz on CRT looking better (not just faster) than 60Hz. I was able to use it longer at 100Hz without getting a headache. I might set it up again some day for retro gaming on old consoles.

I've collected quite a number of original hardware, old school consoles and all of the Analogue FPGA consoles, including the pricey Nt Mini Noir (NES) v2 - the one where they fixed the cartridge slot issue. I think the only remaining OG hardware ones I'd need are the Sega Saturn, Sega Dreamcast, and NeoGeo. Then, possibly NEC TG-16/TurboDuo or some variant of the NEC consoles.

As much praise as CRT gets (and i agree, it was better than LCD/LED in most aspects), the eye-strain & sometimes headaches (if you were looking at one for several hours) were more of a problem for many ppl when using CRT's.

|

|

|

|

Post by sj on Oct 11, 2024 16:27:37 GMT -5

Oh, almost forgot the "MiSTer", which is widely known as the ultimate FPGA retro gaming device (it has FPGA cores for most retro gaming consoles, retro gaming PCs, and arcade). It's expensive and assembly is DIY, so I've hesitated to get one just yet.

|

|

|

|

Post by ForRealTho on Oct 11, 2024 16:55:57 GMT -5

If anyone is interested in an old-school CRT-style monitor, this project made its Kickstarter goal and then some, and also wasn't a scam: www.checkmate1500plus.com/

Also yes, there is absolutely a huge difference between 60hz and above. The pro gaming scene were the first people to know this in the late 1990s. When I got into Counter-Strike I had just bought a monitor that did 1024x768 at 120hz. I got banned from multiple servers for "obvious cheating"... I wasn't cheating. When that monitor finally died and I replaced it with one that only did 85hz, I noticed the difference.

A gaming laptop I got in 2017 was 75 hz and overclocked to 100hz. Such a night and day difference from 60hz. I noticed in games right away if the Nvidia driver glitched out and it was only 60hz. I put 1,000+ hours in PUBG on that monitor and the 100hz made a huge difference.

|

|

|

Post by sj on Oct 11, 2024 17:41:58 GMT -5

I bought a Sony IPS in 4:3 (in the early days of IPS) and it had a beautiful picture w/ vivid colors. Unfortunately, they couldn't compete on price and the lesser competition (at the time) remained in the monitor market.

I noticed Sony has a new monitor (designed for PS), but I don't think it's the above-the-competition quality Sony used to have.

|

|

|

|

Post by sj on Oct 11, 2024 17:48:38 GMT -5

You see a similar problem with emulation vs original hardware (or FPGA).

For example, on the NES Mini (which uses emulation), Punch-Out!! is substantially harder due to even slight input lag (vs original hardware + CRT, which suffered virtually zero input lag).

|

|

|

|

Post by sj on Oct 11, 2024 18:04:44 GMT -5

An interesting fact about this topic is that neuroscientists say our nerve impulses and stimuli (as they travel to the brain) aren't even fast enough to detect the amount of input lag from our electrical devices. They think our brains anticipate what's next (1-2 seconds into the future) with a high degree of accuracy. So while the input lag is real in physical terms, it's only "real" in our minds due to our brain's incredible predictive capacity (and not due to our actual senses or how fast human nerve impulses travel). It "feels" like our senses because it's an illusion that it's our senses. Actually, this is how optical illusions work (your brain subconsciously predicting what happens next). This is why I said "feels" earlier, because that's how to best describe it in neuroscience, based on what's actually going on with our senses & how the mind processes visuals.

|

|